Feelings are often paradoxical in nature. Sometimes the result is called hypocrisy, sometimes merely confusion or stupidity. Being something of a software evangelist I always try to convince people to switch to greener pastures, although my ideas of what is better are not always consistent with what is commonly accepted. For example, I would not try to persuade someone to use Linux, because although the OS appeals to my sense of geekiness and my inherent attraction to free, communal efforts (read: open source), my experience with Linux has all but discouraged me from using (and certainly recommending) the system. That said, when I find something I like and appreciate I certainly stick by it and try to evangelize it to the best of my abilities.

So why was I utterly pissed off when, clicking on this link via a blog post I read using RSSOwl (ironically, another great open source program), I was presented with a large "Please update your web browser!" box right at the top of the website urging me to "upgrade" to Firefox/Safari/Opera/whatever?

I'll tell you why. Because in-your-face evangelism pisses me off. Ilya, a friend of mine, introduced me to Firefox when it was still Firebird 0.5 alpha, and I've been using it ever since. I've been telling everyone who'd listen about it; I've recommended it to friends, family, colleagues and even just random people I have occasionally found myself talking tech with. Back in May, when I remade my personal website into a blog, I designed it for CSS compliance and tested with Firefox first, IE second (and found quite a few incompatibilities in IE in the process). I placed a "Take Back the Web" icon right under the navbar, in the hope that perhaps a casual IE user would stumble upon it and wonder what it's all about. But I don't nag, or at least try very hard not to. I consider people who visit my website guests, and just as I wouldn't shove my goddamn tree-hugging, vegetarian, free-love, energy-conservation way of life* under your nose if you came to visit my house, I wouldn't want you to feel uncomfortable when you enter my website. Which ToastyTech did.

My open-source hippie friends, do us all a favor and take it easy. This sort of thing will not win people over to your cause; at most they'll just get pissed off and avoid your sites altogether. A regular Joe stumbling onto spreadfirefox.com (or, for that matter, Slashdot) would be just as likely to return to it as Luke would to the "wretched hive of scum and villainy" that is Mos Eisley.

* In case you were wondering, I'm no tree-hugging hippie, I'm not a vegetarian, my love is an equal-trade business and I drive a benzine-powered vehicle the same as everyone else. But I care.

So I have to update a bunch of documents, primarily that 6MB pile of text, graphs and objects spanning about 110 pages I mentioned last time. My last experience working on it with Writer (from the OpenOffice.org 2.0 suite) was a blast, so despite the bigger assignment I figured that it would be a good learning experience.

The template the document was originally based on is apparently very ill-conceived because, upon further examination, I noticed some issues that had nothing to do with the document import (for example, the headers were completely handcoded - no autotext or field usage etc.). In the process of reworking parts of the template, namely the table of contents and headers, I had to learn a bit about Writer and would like to share some insights.

First of all, unlike Word (I use 2003), there's no "edit header" mode; you differentiate between the header and the rest of the page by looking at the box outlines (gray by default). When you edit the page header in Word it actually changes editing mode: the rest of the page is grayed out and it's very obvious that you're editing the header itself. I'm not sure which approach I prefer, although I am inclined to go with Word (because it makes it that much more obvious what you're doing at any given time). That said, the Writer interface for fields is more powerful, or at the very least far more intuitive, than Word's. The interface for working with headers is also much more powerful than in Word, although it did take me a little while to get the hang of it; I had to get over some preconceptions from years of working with Word [since version 6 on Windows 3.1!] and spend a couple of minutes using OpenOffice's sparse but surprisingly effective help.

Writer lets you associate each page with a particular "Page Style" and, in turn, each Page Style with its own Header and Footer. Not only that, it lets you define (per Page Style) a different header/footer for odd/even pages (in case you're authoring a double-sided printed document). Once you get the hang of it, defining and using headers is a blast.

Editing headers in OpenOffice (left) vs. Microsoft Word (right)

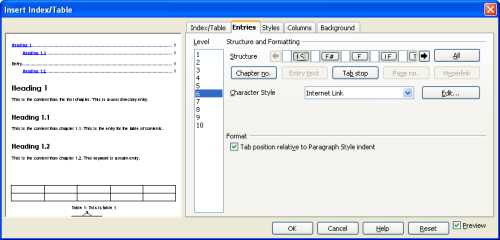

Creating and using indexes and tables (in the classic sense, not graphical tables) with Writer is a lot more powerful than in Word; it gives you a somewhat less-than-intuitive but extremely powerful GUI for setting the structure of each entry in the table/index. I used this feature for creating a Table of Contents in the document; being the tidy tight-ass that I usually am, the document has a very clearly defined outline (same concept as in Word: headings, body text etc.) which made for an extremely easy transition into a table of contents. The process is easier in Word - just add the Outlining toolbar to your layout and click on Update TOC.

It's not really any more complicated in Writer, just slightly less intuitive. From the menu select Insert->Indexes and Tables->Indexes and Tables, choose Table of Contents and you're done. When it comes to customizing the table of contents, though, Word is practically useless; in fact, it took me a couple of minutes of going over menus just to come to the conclusion that I can't remember how to access the customization dialog.

While on the subject, Word manages to obfuscate an amazing number of tools, power tools and necessary functionality behind a convoluted system of toolbars, menus, wizards and dialogs to the point where it's impossible to find what you're looking for. Writer to Word is (not nearly, but getting there) what Firefox is to Internet Explorer as far as usability is concerned: immediately lightweight, does what you want out of the box and when you DO need something nontrivial it's much easier to find (Insert->Header as opposed to View->Header and Footer, for example. Why the hell would I look for something like that in View?). Formatting in general is much more convenient in Writer; for example, you can very easily select a section of text and set it to default formatting with Ctrl+0, which you could theoretically do with Word, but only after spending a lot of time customizing it. There's no easy way to do it out of the box without a lot of unnecessary and inconvenient fiddling with the mouse. It would be interesting to examine Office 12; supposedly Microsoft went to great lengths to improve usability.

But I digress. Back to the table of contents: unlike Word, the default Table of Contents in Writer does not hyperlink to the various sections of the document. In light of the immediacy of the floating Navigator toolbox in Writer (which Word sorely lacks) hyperlinks are simply unnecessary. However, since the document will eventually be exported to Word I needed them anyway. It took another few minutes of searching, but ultimately the answer was completely obvious: right-click the Table of Contents, click on Edit Index/Table and simply customize the structure of the entries via the Entries tab. The process could use some improvement, though: there is no way to change the structure for more than one level at a time, which is quite frustrating, and modifications to the structure are not intuitive (for example, I couldn't figure out why the hyperlinks wouldn't show, and then figured that I probably also need to insert an "end hyperlink" tag into the structure. This would completely baffle a non-technical end-user).

Customizing an index/table with Writer: powerful but not intuitive

So far, though, the experience has been a resoundingly positive one. There's always room for improvement, but if at version 2.0 OpenOffice.org has already managed to supersede Word as my favorite word processor, Microsoft have some serious competition on their hands.

As part of an ongoing OpenGL implementation project, I was tasked with implementing the texture environment functionality into the pipeline (particularly the per-fragment functions with GL_COMBINE). To that end I had to read a few sections of the OpenGL 1.5 specification document, and let me tell you: having never used OpenGL, getting into the "GL state of mind" is no easy task to begin with, but made far worse when the specifications are horribly written.

To begin with, there is absolutely no reason why in 2005 a standard that is still widely used should be published solely as a document intended for printing. Acrobat's shortcomings aside, trying to use (let alone implement!) a non-hyperlinked specification of an API is the worst thing in terms of usability I've had to endure in recent years (and yes, I was indeed around when you could hardly get any API spec, let alone in print. I don't think it matters). Even Borland knew the importance of hyperlinked help with their excellent IDEs of the early '90s. Why is it that so late into the game I still run across things like the spec for TexImage2D, which describes only some of the function's arguments and then proceeds to tell you that "the other parameters match the corresponding parameters of TexImage3D"? I can only imagine the consternation of someone trying to figure out the spec from a book, having to flip all the way to the index at the end to see that the TexImage3D function appears on pages 91, 126, 128, 131-133, 137, 140, 151, 155, 210 and 217 (the function definition is on page 126, by the way).

Hey, SGI! I have a four-letter acronym for you. H-T-M-L. It's been here for a while and is just as portable as PDF (and certainly more lightweight.)

Technical issues aside, the spec itself is simply incomplete; there is no obvious place which tells you where, for example, you have to set the OPERANDn_RGB properties. In fact, if you followed the spec to the letter, the people using your implementation would not be able to set these properties at all, because the spec for the TexEnv functions explicitly specifies the possible properties, and those properties are not among them. You have to make educated guesses (it does make sense that you'd set the texture unit state from TexEnv), do a "reverse lookup" in the state tables at the very end and try to figure out from the get function which set function is responsible for the property, or dig up the MESA sources and try to figure out how they did it (because it's most likely to be the right way of doing things; I would go as far as to say that MESA serves as an unofficial reference for the OpenGL API). Ideally you would do a combination of the above just to make sure that you are compliant, but the spec will not be there to help you.

Finally, the spec makes problematic assumptions about your background. I think it's a little naïve to assume that anyone who reads the OpenGL spec has a strong background in OpenGL programming, or in developing 3D engines. I actually do have some background in 3D engine development and it still took me a few hours of going over the chapter again and again (and trying to infer meanings from code examples on newsgroups) to understand precisely what "Cp and Ap are the components resulting from the previous texture environment" means. In retrospect it seems obvious, particularly in light of some clues littered about, that they were referring to the result of the function on the previous texture unit; however, that would only be really obvious to someone in the "GL state of mind," which at the time I was not. Tricky bits of logic should be better explained, and examples (particularly visual aids such as screenshots!) should be given. But the OpenGL spec has absolutely none of that.
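The "previous texture environment" wording can be pictured as a simple chain. Below is a minimal C# sketch, not taken from the spec or from any real implementation: the `Rgba` and `TexEnvChain` names are hypothetical, and only the MODULATE function is shown. Each texture unit receives the output of the unit before it as (Cp, Ap), and unit 0 receives the incoming fragment colour.

```csharp
using System;

// Hypothetical types for illustration only; none of these names come from the OpenGL spec.
public struct Rgba
{
    public readonly float R, G, B, A;

    public Rgba(float r, float g, float b, float a)
    {
        R = r; G = g; B = b; A = a;
    }
}

public static class TexEnvChain
{
    // MODULATE: component-wise product of the incoming colour (Cp, Ap)
    // and the colour sampled from this unit's texture (Cs, As).
    public static Rgba Modulate(Rgba previous, Rgba sample)
    {
        return new Rgba(previous.R * sample.R, previous.G * sample.G,
                        previous.B * sample.B, previous.A * sample.A);
    }

    // Runs a fragment through every texture unit in order.
    // For unit 0, "previous" is the fragment colour itself;
    // for unit i > 0 it is whatever unit i - 1 produced.
    public static Rgba Apply(Rgba fragment, Rgba[] samplesPerUnit)
    {
        Rgba previous = fragment;
        foreach (Rgba sample in samplesPerUnit)
            previous = Modulate(previous, sample);
        return previous;
    }
}
```

With two units enabled, the second unit never sees the original fragment colour directly; it only sees what the first unit made of it, which is exactly the "previous texture environment" the spec refers to.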

So bottom line, specs are absolutely necessary for APIs; make sure you have people who proofread them, and put the developers that are most customer-savvy on the job. It would be the single worst mistake you can make to put a brilliant, productive and solitary developer on the task, because you would frustrate them beyond belief and the end result would be of considerably inferior quality.

I've updated the remote logging framework with a bugfix; if you're using it (or curious to see how it works/what it can be used for) feel free to download it and have a look.

I've also started hacking away at the PostXING v2.0 sources in my spare time, which unfortunately is very scarce at the moment. If anyone has tricks on how to get through HEDVA (differential/integral mathematics, a.k.a. infinitesimal calculus outside the Technion...) successfully without committing every waking moment, do share.

So I got to see a bunch more movies lately (right now it's the only thing keeping me sane in the face of the dreaded Technion HEDVA/1t course). Here's a brief review of each:

- Ridley Scott's Kingdom of Heaven is a rather belated attempt to capitalize on the "pseudo-historical epic" trend of the last few years which was ironically started by Scott's own Gladiator (in case you were wondering, Braveheart was not a little too early for its own good, having come out in 1995). Synopsis: Balian, the son of Lord Godfrey, is knighted, takes up the task of defending Jerusalem from the forces of Saladin and ends up saving the day etc. Throw in a bunch of clichés about love, what it takes to be a humane leader back in the crusades and some generic morality crap and you've got a seriously badly written film. In its defense it does feature some superb photography, OK action and a huge cast consisting of some favourite actors of mine: Orlando Bloom (who finally appears capable of acting), Alexander Siddig (of DS9 fame), Brendan Gleeson, Jeremy Irons, Edward Norton (who does not appear visually), David Thewlis and finally Liam Neeson, who isn't actually on my list but does deserve a mention. Too bad the movie just plain sucked.

- Nicolas Cage's latest movie Lord of War was an absolute blast. It is an amazingly cynical, mostly funny and quite surprising satire of what makes third world countries wage war, as well as what makes greedy people tick. It doesn't make any excuses for the clichés it employs, and in my opinion it hits the spot precisely: some messages simply do not come across until you bang them into someone's head with an allegorical hammer. My only issue with it is that it fails to keep the satire simply as that, and culminates in a verbal political message which is an exercise in redundancy.

- Instead of making a long point I'll skip to the very end with a summary of The Exorcism of Emily Rose: good acting (particularly by Laura Linney and Tom Wilkinson), reasonable dialog, crappy predictable storyline and no boobs at all. In short, a movie not worth your time; if you're interested in the legal/judicial aspects do yourself a favour and go watch Law & Order, it's what they do.

- I've heard a lot of conflicting opinions on I, Robot. Maddox went as far as to say:

"Here's how I would have changed this film: start out with a shot of Will Smith in a grocery store buying a 6 pack of Dos Equis beer, except instead of paying, the cashier is a Dos Equis marketing rep who hands Smith a thick wad of bills. Next shot: Smith finishes the last of the beer, walks over to Isaac Asimov's grave and lets loose. Why not? Same message, none of the bullshit."

I won't deny that this movie has some serious issues (particularly with product placements; here it was even worse than The Island, if that's even possible) but I would take an opposite view to Maddox's: I enjoyed the movie immensely. Having read the book quite recently I find that the only place where the movie deviated completely from what is detailed in the stories (because I, Robot is not a single coherent storyline, rather comprised of several short tales) is in the depiction of Susan Calvin's character, who is actually very true-to-form in the beginning of the movie. Regardless, the movie features rather imaginative photography, some great action sequences, a plot which ultimately doesn't suck and some of the funniest dialogue I've heard in years (the only competition comes from the underrated Constantine). Bottom line? Recommended.

Chris Frazier has been kind enough to let me in on the PostXING v2.0 alpha program. I tried PostXING v1.0 ages ago and it struck me as an open-source program with lots of potential but (like many other open source projects) not nearly ready for prime-time. I very much hope to be able to help Chris make v2.0 a practical contender to my regular blogging tool, w.bloggar. It certainly seems on the right track, and as you can see is already reasonably usable.

If anyone has anything they wished for in a blogging tool, let Chris know - he may yet make it worth your while.

Despite a lot of work by various people (including, but not limited to, Jeff Atwood at CodingHorror.com and Colin Coller), creating syntax-highlighted HTML from code with Visual Studio is still extremely annoying; you don't get the benefit of background colours (as explained by Jeff), you face bizarre issues (try Jeff's plugin on configuration files for instance) and finally, ReSharper-enhanced syntax highlighting cannot be supported by either of these solutions.

I've submitted a feature request to the JetBrains bugtracker for ReSharper; since I regard ReSharper as an absolute must-have and it already does source parsing and highlighting, features powerful formatting rules and templates etc., I figure it's the definitive platform for such a feature. Read the FR and comment here; I reckon if it gets enough attention they probably will implement it in one of the next builds.

As a side-note, I'm still keeping the ReSharper 2.0 EAP post up-to-date on the latest builds. Make sure to have a look every now and then.

Update (27/11/2005, 15:40): After putting the remote logging framework to use on an actual commercial project I've found that the hack I used for mapping a method to its concrete (nonvirtual) implementation wasn't working properly for explicit interface implementations. One horrible hack (which fortunately never saw the light of day) and a little digging later I've come across a useful trick on Andy Smith's weblog and added some special code to handle interfaces. The new version is available from the download section.

Introduction

As part of the ongoing project I'm working on I was asked to implement a sort of macro recording feature into the product engine. The "keyboard" (as it were) is implemented as an abstract, remotable CAO interface which is registered in the engine via a singleton factory. I figured that the easiest way to record a macro would be to save a list of all remoting calls from when recording begins to when recording ends; the simplest way would be to add code to the beginning of every remote method implementation to save the call and its parameters. Obviously, that is an ugly solution.

About a year ago when I was working on a different project (a web-service back end for what would hopefully become a very popular statistics-gathering service) I faced a similar issue: the QA guys asked for a log of every single Web Service method call for performance and usability analysis. At the time I implemented this as a SOAP extension which infers some details using Reflection and saves everything to a specific log (via log4net). A couple of hours of research, 30 minutes of coding and I was done - the code even worked on the first try! - which only served to boost my confidence in my choice of frameworks for this project. My point is, I figured I would do something along the same lines for this project, and went on to do some research.

If you're just interested in the class library and instructions, skip to instructions or download.

Hurdles ahoy

I eventually settled on writing a Remoting server channel sink to do the work for me. I'll save you the nitty-gritty details; suffice to say that the entire process of Remoting sink providers is not trivial and not in any way sufficiently documented. There are some examples around the internet and even a pretty impressive article by Motti Shaked which proved useful, but didn't solve my problem. I eventually got to the point where I could, for a given type, say which methods on that type were invoked via Remoting and with which parameters, but I couldn't tell the object instance for which they were invoked no matter what. A sink may access the IMethodMessage interface which gives you a wealth of information, including arguments, the MethodBase for the method call etc., but the only indication of which object is actually being called is its URI (the interface's Uri property). Unfortunately I couldn't find any way of mapping a URI back to its ObjRef (something like the opposite of RemotingServices.GetObjectUri), only its type.

After a long period of frustrating research and diving into the CLR implementation with the use of Reflector I came to the conclusion that the only way to convert the URI back to an object (outside of messing with framework internals via Reflection) would be to implement ITrackingHandler and maintain a URI-object cache. Another annoying hurdle was that the framework internally adds an application ID to its URIs and forwards the calls accordingly; for example, connecting to a server at URI tcp://localhost:1234/test would in fact connect to the object at local URI /3f4fd025_377a_4fda_8d50_2b76f0494d52/test. It took a bit of further digging to find out that this application ID is available in the property RemotingConfiguration.ApplicationId (obvious in retrospect, but there was nothing to point me in the right direction), which allowed me to normalize the incoming URIs and match them to the cache.
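To make the URI normalization concrete, here is a minimal sketch of such a cache. It is not the framework's actual code: the `UriObjectCache` class and its members are hypothetical, and it assumes that normalizing means stripping the leading "/<application id>/" segment so that both URI forms map to the same key. In a real sink, `Add` and `Remove` would presumably be driven from an ITrackingHandler implementation, and the application ID would be read from RemotingConfiguration rather than passed in by hand.

```csharp
using System;
using System.Collections;

// Hypothetical helper for illustration; not the framework's actual code.
public sealed class UriObjectCache
{
    private readonly string prefix;                          // "/<application id>/"
    private readonly Hashtable objects = new Hashtable();    // normalized URI -> instance

    public UriObjectCache(string applicationId)
    {
        prefix = "/" + applicationId + "/";
    }

    // Strips the leading "/<application id>/" if present, so that
    // "/<id>/test" and "/test" both end up as "test".
    public string Normalize(string uri)
    {
        if (uri.StartsWith(prefix, StringComparison.Ordinal))
            return uri.Substring(prefix.Length);
        return uri.TrimStart('/');
    }

    public void Add(string uri, object instance)
    {
        lock (objects) { objects[Normalize(uri)] = instance; }
    }

    public void Remove(string uri)
    {
        lock (objects) { objects.Remove(Normalize(uri)); }
    }

    // Returns the cached instance, or null if the URI is unknown.
    public object Find(string uri)
    {
        lock (objects) { return objects[Normalize(uri)]; }
    }
}
```

The lock is there because marshaling notifications and incoming calls can arrive on different threads; a plain Hashtable is only safe for a single writer.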

Finally, inferring virtual method implementations and URI server types are relatively costly operations, so I've added a caching mechanism for both in order to cut down on the performance loss. I haven't benchmarked this solution, but I believe the performance hit after the first method call should be negligible compared to "regular" remoting calls.
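As an illustration of the caching idea, the sketch below resolves an interface method to the method that implements it on a concrete type and remembers the answer. It is only an assumption about how this could be done, not the code in the download: `ImplementationCache`, `IKeyboard` and `Keyboard` are hypothetical names, and the lookup relies on the standard `Type.GetInterfaceMap` reflection call, which also covers explicit interface implementations.

```csharp
using System;
using System.Collections;
using System.Reflection;

// Hypothetical example types, used only to exercise the cache.
public interface IKeyboard
{
    string Press(string key);
}

public class Keyboard : IKeyboard
{
    // Explicit interface implementation: not visible as a public method on Keyboard.
    string IKeyboard.Press(string key) { return "pressed " + key; }
}

// Hypothetical helper; not the framework's actual code.
public static class ImplementationCache
{
    // concrete Type -> (interface MethodInfo -> implementing MethodInfo)
    private static readonly Hashtable cache = new Hashtable();

    public static MethodInfo Resolve(Type concreteType, MethodInfo interfaceMethod)
    {
        lock (cache)
        {
            Hashtable perType = (Hashtable)cache[concreteType];
            if (perType == null)
                cache[concreteType] = perType = new Hashtable();

            MethodInfo target = (MethodInfo)perType[interfaceMethod];
            if (target == null)
            {
                // The reflection lookup is the costly part; do it once per pair.
                target = Lookup(concreteType, interfaceMethod);
                perType[interfaceMethod] = target;
            }
            return target;
        }
    }

    private static MethodInfo Lookup(Type concreteType, MethodInfo interfaceMethod)
    {
        InterfaceMapping map = concreteType.GetInterfaceMap(interfaceMethod.DeclaringType);
        for (int i = 0; i < map.InterfaceMethods.Length; i++)
        {
            if (map.InterfaceMethods[i].MethodHandle.Equals(interfaceMethod.MethodHandle))
                return map.TargetMethods[i];
        }
        throw new ArgumentException("Method not found in interface map", "interfaceMethod");
    }
}
```

Only the first call for a given type and method pays for the reflection lookup; later calls are a couple of hashtable reads, which is consistent with the expectation that the cost after the first call is small.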

How to use this thing

Obviously the first thing to do would be to download the source and add a reference to the class library. I've built and tested it with Visual Studio 2003; I'm pretty confident that it would work well with 2005 RTM, and probably not work with 1.0 or Mono (although I would be delighted to find out otherwise, if anyone bothers to check...)

Next you must configure Remoting to actually make use of the new provider. You would probably want the logging sink provider as the last provider in the server chain (meaning just before actual invocation takes place); if you're configuring Remoting via a configuration file, this is very easy:

    <serverProviders>
        <!-- Note that ordering is absolutely crucial here - our provider must come AFTER -->
        <!-- the formatter -->
        <formatter ref="binary" typeFilterLevel="Full" />
        <provider type="TomerGabel.RemoteLogging.RemoteLoggingSinkProvider, RemoteLogging" />
    </serverProviders>

Doing it programmatically is slightly less trivial but certainly possible:

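    using System.Collections;
    using System.Runtime.Remoting.Channels;
    using System.Runtime.Remoting.Channels.Tcp;
    using System.Runtime.Serialization.Formatters;

    Hashtable prop = new Hashtable();
    prop[ "port" ] = 1234;
    BinaryServerFormatterSinkProvider prov = new BinaryServerFormatterSinkProvider();
    prov.TypeFilterLevel = TypeFilterLevel.Full;
    // The logging provider is chained AFTER the formatter, same as in the configuration file
    prov.Next = new TomerGabel.RemoteLogging.RemoteLoggingSinkProvider();
    ChannelServices.RegisterChannel( new TcpChannel( prop, null, prov ) );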

Now it is time to decide which types and/or methods get logged. Do this by attaching a [LogRemoteCall] attribute; you can use this attribute with classes (which would log all method calls made to instances of that class) or with specific methods:

    [LogRemoteCall]
    public class ImpCAOObject : MarshalByRefObject, ICAOObject
    {
        public void DoSomething() ...
    }

    class ImpExample : MarshalByRefObject, IExample
    {
        // ...
        [LogRemoteCall]
        public ICAOObject SendMessage( string message ) ...
    }

Next you should implement IRemoteLoggingConsumer:

    class Driver : IRemoteLoggingConsumer
    {
        // ...
        public void HandleRemoteCall( object remote, string method, object[] args )
        {
            Console.WriteLine( "Logging framework intercepted a remote call on object {0}, method {1}",
                remote.GetHashCode(), method );
            int i = 0;
            foreach ( object arg in args )
                Console.WriteLine( "\t{0}: {1}", i++, arg == null ? "null" : arg );
        }
    }

Finally you must register with the remote call logging framework via RemoteLoggingServices.Register. You can register as many consumers as you like; moreover, each consumer can be set to receive notification for all remotable types (for generic logging) or a particular type (for example, macro recording as outlined above).

    // Register ourselves as a consumer
    RemoteLoggingServices.Register( new Driver() );

Download

Source code and examples can be downloaded here. Have fun and do let me know what you think!

I haven't had much time on my hands lately, what with buying a car, moving into a new place (a nice large flat in Haifa), work and starting university (at the Technion). Coupled with the fact that I've stopped working full-time and that I'm eagerly awaiting the new Visual Studio 2005 (due to come out on November 7th), the direct result is a very low rate of posting lately.

Despite all of the above, I've managed to snag a few hours of gameplay and have a few comments to make:

- The F.E.A.R demo was absolutely terrific. Admittedly my (now two-year-old) machine is no match for the souped-up 3D engine, but it still managed perfectly playable (>40) framerates at 800x600 at very high detail levels. This will not do, so I expect to buy a new console/machine pretty soon (probably the latter, I'm not very fond of consoles), but regardless the hour or so of gameplay featured in the demo was very satisfying indeed. The graphics are absolutely top-notch, the bullet-time effect is finally something to write home about (although it seems more like a last-minute addition than a feature based in solid design) and the gameplay is very good indeed.

- I also played the Serious Sam 2 demo and enjoyed it quite a bit. It's not as slick and tongue-in-cheek as the first game was, but the 30-minute-odd level was very fun indeed. This time, however, the 3D engine is anything but revolutionary; it's decent enough, but not quite as fast and not quite as good looking as some of its competition (F.E.A.R, Doom 3, Half-Life 2 come to mind). My laptop (Dothan 1.7GHz, Radeon 9700 Mobility) couldn't handle more than medium detail at 1024x768; considering that the game isn't really visually groundbreaking (the laptop handles HL2/Doom 3 fluently) this isn't very encouraging.

- Half Life 2: Lost Coast is out and kicks a lot of ass. Aside from the top-notch map design (which isn't annoying, stupid or frustrating like some parts of HL2 itself), the new HDR mode is absolutely stunning. On my gaming machine (same one that couldn't handle F.E.A.R...), as long as I don't run with AA everything is very smooth and looks beautiful. There is also a nifty commentary feature which allows you to hear (on demand) audio commentary by the team responsible for the game. On the negative side, Source is still a horrible mess as they haven't fixed the millions of caching and sound issues, and the loading times are dreadful to boot. Plus, 350MB for a demo based on pre-existing resources seems a bit much.

- I had amazingly high expectations from Indigo Prophecy considering all the hype. To make a very long story short, it got uninstalled about 5 minutes into the tutorial. The controls are horrible, horrible to the point where I couldn't figure out for the life of me what the hell the tutorial wants from me. Aside from the already convoluted interface, the tutorial at some point wants to "test your reflexes"; it does this by showing you a sketch of a D-pad controller (I guess the game was originally devised for consoles...), and at the opportune moment one of the controller buttons lights up and you have to press the same button on your actual controller as fast as possible. As the reigning deathmatch king in the vicinity I think I can safely say that my reflexes were NOT the issue here, not after about 20 attempts by myself and about 20 more by my brother. Either the tutorial does a terrible job at conveying what it is you're supposed to do, or the game is simply badly programmed. Either way, removed, gone, zip, zilch. Unless some future patch seriously alters the control scheme I'm not touching this game with a 60-foot pole. This only goes to prove my theory that consoles are directly responsible for the lower quality of PC titles today; not because of technology, not because of cost, but simply because of shitty controls originating in consoles and badly ported to the PC. Want a counter-example? Psychonauts has absolutely perfect controls, even on the PC.

- I spent about 25 minutes watching my brother play Shadow Of The Colossus on the PlayStation 2. Graphically the game is very impressive, however it tries to do a lot more than the aging PS2 platform can really handle; I've seen framerates in the 10-15 range, which for a straightforward console title is simply not cool. I haven't actually played the game, but from watching my brother I would have said that the controls are either poor or difficult to get a handle on; a couple of days later, though, my brother said that the real problem is simply an ineffective tutorial and "it's really quite alright once you get the hang of it." Gameplay-wise it didn't seem overly exciting, but I may yet give it a shot at some point.

- After a couple of hours playing Five Magical Amulets I believe I can safely conclude that, while I appreciate that it is a labour of love and a lot of work went into making it, it's simply not a good game. The plot is very simple and uninspired; some of the dialogs are very poorly written; the quests are either too simple and easy to figure out or simply make very little sense (minor spoiler: combining the fly and the pitch made some sense, but the bag?!) and the graphics are very amateur. The whole game is Kyrandia-esque but without the high production values, although in its defense the music is actually quite good. Bottom line: there are better independent games for sure. The White Chamber is one.

I gave OpenOffice.org 2.0 a spin today; I installed it, started up Writer, imported a >6MB Word document spanning well over 100 pages with lots of graphical content, edited huge chunks of it (including vast modifications, new pages, internal links, cuts and pastes from various sources etc.) and found it to be mostly superior to Microsoft Word 2003.

For starters, the menus make a hell of a lot more sense than the obfuscated Word system of nested option dialogs. It was a lot easier to find stuff in the menus, and - much more importantly - everything worked from square one. I hardly had to touch the options (only to reassign Ctrl+K to "insert hyperlink" - an old habit from my Word days) and I could play the software like a finely tuned piano. I found it superior to Word in many subtle ways: for example, the Navigator (F5 by default) turned out to be invaluable for said document (which is tightly hierarchical and very long and complex); linking was almost as good, requiring just one more keypress than Word for internal links within the document; formatting and reformatting was made a lot easier with HTML-like formatting options and built-in keybindings (such as Ctrl+0 for default formatting, which I found invaluable) and the whole shebang was rock-stable. Now that's what I call open source done right!

I did have a few gripes, obviously. For starters, I couldn't find a "Navigate Back" button anywhere (in Word I remapped it to Ctrl+-, following Visual Studio 2003), which I sorely miss. Believe it or not, the only other gripe I have is with the loading/saving system: the loading/saving times are considerably longer than Word's, particularly for imported/exported documents (i.e. loading or saving a Word-format doc file). Word appears to implement some sort of delta-save mechanism, because when editing the same document with Word subsequent saves via Ctrl+S (which I do about twice a minute due to paranoia, same with Visual Studio 2003...) were considerably faster (sometimes 1-2 seconds instead of almost 10). However, remember that this is a huge document we're talking about - I've rarely edited 100-page documents, or even seen them being edited. Not in my profession anyway.

I've yet to give Calc and Impress a spin, but if Writer is anything to go by I expect to be blown away. Until Office 12 comes along I doubt I'll be firing up Word very often.
